    pip install torch nltk tqdm

We will train the model on a dataset of long texts paired with their summaries. Choose whichever text summarization dataset fits your needs.

    import torch
    from torch.utils.data import Dataset, DataLoader
    from torch.nn.utils.rnn import pad_sequence
    from nltk.tokenize import word_tokenize
    import string

    # word_tokenize needs NLTK's "punkt" tokenizer data. If it is missing, run
    # nltk.download("punkt") once (newer NLTK releases ask for "punkt_tab").


    class TextSummaryDataset(Dataset):
        """Pairs each source text with its reference summary."""

        def __init__(self, texts, summaries):
            self.texts = texts
            self.summaries = summaries

        def __len__(self):
            return len(self.texts)

        def __getitem__(self, idx):
            return self.texts[idx], self.summaries[idx]


    # Sample data
    texts = [
        "This is a sample text for summarization.",
        "PyTorch is an open-source deep learning library.",
        "Natural language processing involves understanding and analyzing human language.",
    ]
    summaries = [
        "Sample text summarization example.",
        "PyTorch is a deep learning library.",
        "NLP analyzes human language.",
    ]

    # Create the dataset instance
    dataset = TextSummaryDataset(texts, summaries)

Step 3: Text processing and tokenization

We need to convert the text into a format the model can accept. This includes tokenization and removing punctuation.
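Punctuation removal is commonly done by keeping only purely alphabetic tokens. The sketch below applies that filter to a hand-written token list (an assumption standing in for real tokenizer output, which may split differently) to show a side effect: hyphenated words are dropped along with the punctuation. The helper name `keep_alpha` is hypothetical.

```python
# Hypothetical tokenizer output for one of the sample sentences.
raw_tokens = ["PyTorch", "is", "an", "open-source", "deep", "learning", "library", "."]


def keep_alpha(tokens):
    """Lowercase tokens and keep only those made entirely of letters."""
    return [tok.lower() for tok in tokens if tok.isalpha()]


cleaned = keep_alpha(raw_tokens)
dropped = [tok for tok in raw_tokens if not tok.isalpha()]

print(cleaned)  # ['pytorch', 'is', 'an', 'deep', 'learning', 'library']
print(dropped)  # ['open-source', '.']
```

If hyphenated terms matter for the summaries, one looser alternative is to drop only tokens that appear in `string.punctuation`.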

    def preprocess_text(text):
        # Tokenize, lowercase, and keep only alphabetic tokens
        # (this drops punctuation and numbers)
        tokens = word_tokenize(text)
        tokens = [token.lower() for token in tokens if token.isalpha()]
        return tokens


    # Preprocess the sample data
    processed_texts = [preprocess_text(text) for text in texts]
    processed_summaries = [preprocess_text(summary) for summary in summaries]

Step 4: Build the Seq2Seq model

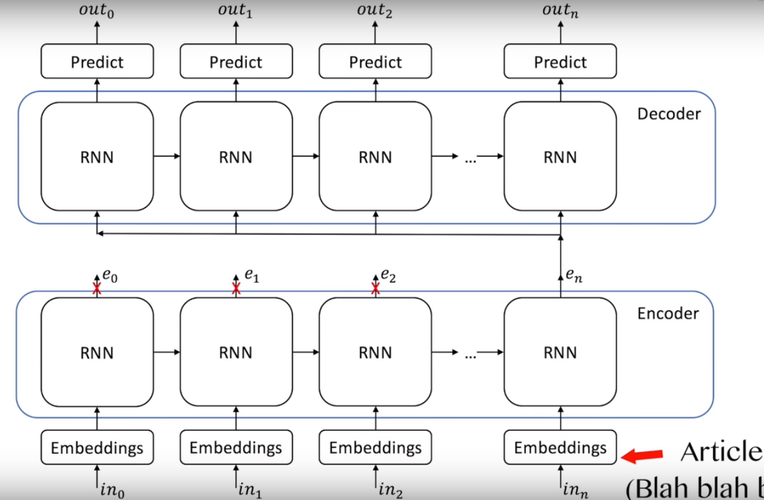

We will use a Seq2Seq model, which consists of an encoder and a decoder.
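Both networks begin with an embedding layer, which looks up integer IDs rather than strings, so the tokens need a vocabulary first. A minimal sketch, assuming three reserved symbols (`<sos>`, `<eos>`, `<unk>`) and two hypothetical helpers, `build_vocab` and `encode`; none of these names come from a library:

```python
SPECIALS = ["<sos>", "<eos>", "<unk>"]  # assumed reserved symbols


def build_vocab(token_lists):
    """Map each distinct token to an integer ID; the specials get the lowest IDs."""
    words = sorted({tok for toks in token_lists for tok in toks})
    return {tok: idx for idx, tok in enumerate(SPECIALS + words)}


def encode(tokens, vocab, add_eos=False):
    """Turn tokens into IDs, sending unseen words to <unk>."""
    ids = [vocab.get(tok, vocab["<unk>"]) for tok in tokens]
    if add_eos:
        ids.append(vocab["<eos>"])
    return ids


corpus = [["pytorch", "is", "a", "library"], ["nlp", "analyzes", "language"]]
demo_vocab = build_vocab(corpus)
vocab_size = len(demo_vocab)  # the size an embedding layer over this vocabulary needs

print(vocab_size)  # 10
print(encode(["pytorch", "analyzes", "images"], demo_vocab, add_eos=True))  # [9, 4, 2, 1]
```

Sorting the words keeps the ID assignment stable between runs, which matters when a saved model is reloaded with a rebuilt vocabulary.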

    import torch.nn as nn
    import torch.optim as optim


    class Encoder(nn.Module):
        def __init__(self, input_size, embedding_size, hidden_size, num_layers=1):
            super().__init__()
            self.embedding = nn.Embedding(input_size, embedding_size)
            self.rnn = nn.GRU(embedding_size, hidden_size, num_layers)

        def forward(self, x):
            # x: 1-D tensor of token indices (a single, unbatched sequence)
            embedded = self.embedding(x)
            output, hidden = self.rnn(embedded)
            return output, hidden


    class Decoder(nn.Module):
        def __init__(self, output_size, embedding_size, hidden_size, num_layers=1):
            super().__init__()
            self.embedding = nn.Embedding(output_size, embedding_size)
            self.rnn = nn.GRU(embedding_size, hidden_size, num_layers)
            self.fc = nn.Linear(hidden_size, output_size)

        def forward(self, x, hidden):
            # x: scalar token index; give it a sequence dimension of length 1
            x = x.unsqueeze(0)
            embedded = self.embedding(x)
            output, hidden = self.rnn(embedded, hidden)
            prediction = self.fc(output.squeeze(0))  # logits over the vocabulary
            return prediction, hidden


    # Example model creation.
    # Both sizes must equal the vocabulary size, not the number of sentences:
    # every distinct word plus the two special tokens <sos> and <eos>.
    vocab_words = {word for sent in processed_texts + processed_summaries for word in sent}
    input_size = len(vocab_words) + 2
    output_size = input_size  # encoder and decoder share one vocabulary
    embedding_size = 128
    hidden_size = 256
    encoder = Encoder(input_size, embedding_size, hidden_size)
    decoder = Decoder(output_size, embedding_size, hidden_size)

    # Define the loss function and optimizers
    criterion = nn.CrossEntropyLoss()
    encoder_optimizer = optim.Adam(encoder.parameters(), lr=0.001)
    decoder_optimizer = optim.Adam(decoder.parameters(), lr=0.001)

Step 5: Train the Seq2Seq model

    from tqdm import tqdm

    # Build the vocabulary. <sos> and <eos> are reserved so that the decoder
    # has a start symbol and can learn when to stop.
    words = sorted({word for sent in processed_texts + processed_summaries for word in sent})
    word2index = {word: idx for idx, word in enumerate(["<sos>", "<eos>"] + words)}
    index2word = {idx: word for word, idx in word2index.items()}


    def train_seq2seq_model(encoder, decoder, dataset, criterion,
                            encoder_optimizer, decoder_optimizer, num_epochs=5):
        for epoch in range(num_epochs):
            total_loss = 0
            for text, summary in tqdm(dataset):
                # Convert the text and summary into index tensors;
                # the target sequence ends with <eos>
                text_tokens = torch.tensor([word2index[word] for word in text], dtype=torch.long)
                summary_tokens = torch.tensor(
                    [word2index[word] for word in summary] + [word2index["<eos>"]],
                    dtype=torch.long,
                )

                encoder_optimizer.zero_grad()
                decoder_optimizer.zero_grad()

                # Encoder
                encoder_output, encoder_hidden = encoder(text_tokens)

                # The decoder starts from the start symbol "<sos>"
                decoder_input = torch.tensor(word2index["<sos>"], dtype=torch.long)
                decoder_hidden = encoder_hidden

                # Decoder output sequence
                predicted_sequence = []
                for t in range(len(summary_tokens)):
                    prediction, decoder_hidden = decoder(decoder_input, decoder_hidden)
                    predicted_sequence.append(prediction)
                    # Teacher forcing: the next input is the ground-truth summary token
                    decoder_input = summary_tokens[t]

                # Compute the loss
                predicted_sequence = torch.stack(predicted_sequence, dim=0)
                loss = criterion(predicted_sequence, summary_tokens)
                total_loss += loss.item()

                # Backpropagate and update the weights
                loss.backward()
                encoder_optimizer.step()
                decoder_optimizer.step()

            print(f"Epoch [{epoch+1}/{num_epochs}], Loss: {total_loss / len(dataset)}")


    # Build the dataset from the preprocessed tokens
    dataset = TextSummaryDataset(processed_texts, processed_summaries)

    # Run training
    train_seq2seq_model(encoder, decoder, dataset, criterion,
                        encoder_optimizer, decoder_optimizer, num_epochs=5)

Step 6: Generate a summary
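Generation here follows a greedy decoding loop: start from `<sos>`, feed each predicted token back in as the next input, and stop at `<eos>` or after a maximum number of steps. The loop can be sketched without a neural network by assuming a hypothetical `next_token_scores` function in place of the trained decoder; the score table is made up for illustration.

```python
# Made-up scores standing in for a trained decoder: previous token -> next-token scores.
TOY_SCORES = {
    "<sos>": {"pytorch": 0.7, "is": 0.2, "<eos>": 0.1},
    "pytorch": {"is": 0.8, "library": 0.1, "<eos>": 0.1},
    "is": {"library": 0.6, "pytorch": 0.1, "<eos>": 0.3},
    "library": {"<eos>": 0.9, "is": 0.1},
}


def next_token_scores(previous_token):
    """Hypothetical decoder step: return a score for each candidate next token."""
    return TOY_SCORES[previous_token]


def greedy_decode(max_length=10):
    """Pick the highest-scoring token at each step until <eos> or max_length."""
    output = []
    current = "<sos>"
    for _ in range(max_length):
        scores = next_token_scores(current)
        current = max(scores, key=scores.get)
        if current == "<eos>":
            break  # stop before the end marker reaches the output
        output.append(current)
    return output


print(greedy_decode())              # ['pytorch', 'is', 'library']
print(greedy_decode(max_length=2))  # ['pytorch', 'is']
```

A real decoder also carries a hidden state from one step to the next, which this toy table leaves out.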

    def generate_summary(encoder, decoder, text, max_length=50):
        # Convert the input text to indices (every word must be in the vocabulary)
        text_tokens = torch.tensor(
            [word2index[word] for word in preprocess_text(text)], dtype=torch.long
        )

        summary = []
        with torch.no_grad():  # no gradients are needed at inference time
            # Encoder
            encoder_output, encoder_hidden = encoder(text_tokens)

            # The decoder starts from the start symbol "<sos>"
            decoder_input = torch.tensor(word2index["<sos>"], dtype=torch.long)
            decoder_hidden = encoder_hidden

            # Generate one token per step
            for _ in range(max_length):
                prediction, decoder_hidden = decoder(decoder_input, decoder_hidden)
                predicted_word_index = torch.argmax(prediction).item()

                # Stop at the end symbol "<eos>" without adding it to the summary
                if predicted_word_index == word2index["<eos>"]:
                    break
                summary.append(index2word[predicted_word_index])

                # The prediction becomes the next input
                decoder_input = torch.tensor(predicted_word_index, dtype=torch.long)

        return " ".join(summary)


    # Example: generate a summary
    input_text = "This is a sample text for summarization."
    generated_summary = generate_summary(encoder, decoder, input_text)
    print("Original Text:", input_text)
    print("Generated Summary:", generated_summary)

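In this project, we built a Seq2Seq model for text summarization. For better summaries, you can move to a larger model, train on a larger dataset, and run more training iterations.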
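Training on a larger dataset usually means processing several examples per step, which requires sequences of equal length. The pure-Python sketch below shows the padding idea, assuming a padding ID of 0 and a hypothetical helper `pad_batch`; in PyTorch, `torch.nn.utils.rnn.pad_sequence` does the equivalent for tensors.

```python
PAD_ID = 0  # assumed ID reserved for padding


def pad_batch(sequences, pad_id=PAD_ID):
    """Right-pad ID lists to the longest length and return a mask of real tokens."""
    max_len = max(len(seq) for seq in sequences)
    padded = [seq + [pad_id] * (max_len - len(seq)) for seq in sequences]
    mask = [[1] * len(seq) + [0] * (max_len - len(seq)) for seq in sequences]
    return padded, mask


batch = [[5, 8, 3], [7, 2], [4, 9, 6, 1]]
padded, mask = pad_batch(batch)

print(padded)  # [[5, 8, 3, 0], [7, 2, 0, 0], [4, 9, 6, 1]]
print(mask)    # [[1, 1, 1, 0], [1, 1, 0, 0], [1, 1, 1, 1]]
```

The mask lets the loss skip padded positions; `nn.CrossEntropyLoss` offers an `ignore_index` argument for the same purpose.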